Understanding Shapley Values in Machine Learning

Key Takeaways

- Shapley values are a method from cooperative game theory, used in machine learning to fairly attribute the contribution of each feature value to the prediction.

- Shapley values help provide an in-depth explanation of how machine learning models make predictions.

- The time needed to compute exact Shapley values grows exponentially with the number of features.

- Although Shapley values have numerous advantages, they also have drawbacks, such as long computation times and a risk of misinterpretation.

- Several software packages compute Shapley values, including iml, fastshap, and Shapley.jl, and related approaches such as SHAP are also available.

Introduction

Shapley values, a concept from cooperative game theory, have found their way into the realm of machine learning. Named after the mathematician and economist Lloyd Shapley, Shapley values provide a method of fairly attributing the contribution of each feature value to the prediction of a machine learning model.

But how do Shapley values work in the context of machine learning? How are they computed? What are their advantages and disadvantages? And what software solutions exist for calculating Shapley values? This article aims to provide detailed answers to these questions.

Understanding Shapley Values

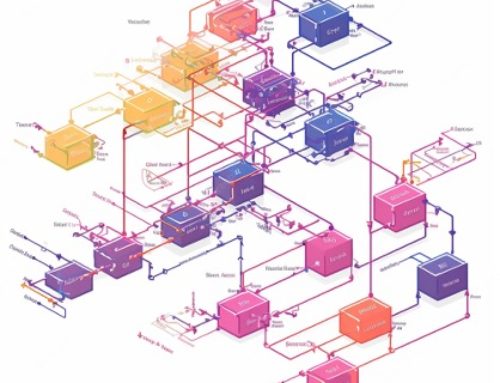

In cooperative game theory, the Shapley value divides the total payout of a game among the players according to each player’s contribution. When applied to machine learning, each feature value of an instance is treated as a “player” in the game, and the prediction is the payout.

The Shapley value of a feature value is its (weighted) average marginal contribution across all possible coalitions of the other feature values. It explains how much that feature value contributed to the difference between the actual prediction for the instance and the average prediction over the dataset.
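For reference, the definition can be written out as follows. The notation is an assumption of this sketch and is not taken from the article. Here f is the model, x is the instance being explained, p is the number of features, and S is a coalition that does not contain feature j. The value function val_x(S) is the expected prediction when the features in S are fixed to their values in x and the remaining features \bar{S} vary according to the data, minus the average prediction.

```latex
\phi_j = \sum_{S \subseteq \{1, \dots, p\} \setminus \{j\}}
  \frac{|S|!\,(p - |S| - 1)!}{p!}
  \left( \mathrm{val}_x(S \cup \{j\}) - \mathrm{val}_x(S) \right),
\qquad
\mathrm{val}_x(S) = \mathbb{E}_{X}\!\left[ f(x_S, X_{\bar{S}}) \right] - \mathbb{E}_{X}\!\left[ f(X) \right]
```

The weight |S|!(p - |S| - 1)!/p! is the fraction of the p! possible feature orderings in which exactly the features of S come before feature j. As a result, φ_j is the average marginal contribution of feature j over all orderings.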

Computation of Shapley Values

Computing the Shapley value for a feature value involves evaluating its contribution when it is added to a coalition of other feature values. To do this, we simulate a situation in which only the feature values in the coalition are known, and the remaining features are replaced with values drawn from other instances in the dataset. The contribution is the difference between the predictions made with and without the feature value in question, averaged over these replacements.
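A minimal sketch of the exact computation follows, under three assumptions. The code uses NumPy, the model is exposed as a `predict` function over a 2-D array, and "absent" features are filled in from a small background dataset. The names `coalition_value`, `exact_shapley`, and `model` are hypothetical and for illustration only.

```python
from itertools import combinations
from math import factorial

import numpy as np


def coalition_value(predict, x, background, coalition):
    """Mean prediction when the features in `coalition` are fixed to x's values
    and every other feature keeps its value from each background row."""
    data = background.astype(float).copy()
    idx = list(coalition)
    data[:, idx] = x[idx]
    return predict(data).mean()


def exact_shapley(predict, x, background):
    """Exact Shapley values: enumerate every coalition of the other features."""
    p = x.shape[0]
    phi = np.zeros(p)
    for j in range(p):
        others = [k for k in range(p) if k != j]
        for size in range(p):  # coalition sizes 0 .. p-1
            weight = factorial(size) * factorial(p - size - 1) / factorial(p)
            for coalition in combinations(others, size):
                gain = (coalition_value(predict, x, background, coalition + (j,))
                        - coalition_value(predict, x, background, coalition))
                phi[j] += weight * gain
    return phi


# Usage with a hypothetical model that has an interaction between features 1 and 2.
def model(X):
    return 2.0 * X[:, 0] + X[:, 1] * X[:, 2]


rng = np.random.default_rng(0)
background = rng.normal(size=(50, 3))
x = np.array([1.0, 2.0, -1.0])
phi = exact_shapley(model, x, background)
gap = model(x[None, :])[0] - model(background).mean()
print(phi, phi.sum(), gap)  # phi.sum() matches gap up to rounding
```

The nested loops make the exponential cost visible. Each feature requires two model evaluations for each of the 2^(p-1) coalitions of the other features.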

Unfortunately, the computation time for exact Shapley values grows exponentially with the number of features, because p features yield 2^p possible coalitions. To keep the computation manageable, contributions are usually estimated from a random sample of the possible coalitions rather than from all of them.
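The sampling idea can be sketched as a Monte Carlo estimate over random feature orderings. The sketch assumes NumPy, a `predict` function over a 2-D array, and a background dataset used to fill in absent features. Each iteration draws one background row `z` and one ordering. It then compares two versions of `z`: one in which feature j and the features preceding it take the instance's values, and one in which only the preceding features do. The function name and the `n_iter` and `seed` parameters are hypothetical.

```python
import numpy as np


def sampled_shapley(predict, x, background, j, n_iter=1000, seed=0):
    """Monte Carlo estimate of feature j's Shapley value from random orderings."""
    rng = np.random.default_rng(seed)
    p = x.shape[0]
    total = 0.0
    for _ in range(n_iter):
        z = background[rng.integers(len(background))].astype(float)  # random background row
        order = rng.permutation(p)                        # random ordering of the features
        before = order[: np.flatnonzero(order == j)[0]]   # features that come before j
        with_j = z.copy()
        with_j[before] = x[before]                        # earlier features take x's values
        with_j[j] = x[j]                                  # and so does feature j
        without_j = with_j.copy()
        without_j[j] = z[j]                               # here j keeps the background value
        total += predict(with_j[None, :])[0] - predict(without_j[None, :])[0]
    return total / n_iter


# Usage: for a linear model and a single all-zero background row,
# every sample gives the same contribution beta_j * x_j.
beta = np.array([0.5, -1.0, 2.0])


def linear_model(X):
    return X @ beta


background = np.zeros((1, 3))
x = np.array([1.0, 2.0, 3.0])
estimates = [sampled_shapley(linear_model, x, background, j, n_iter=200) for j in range(3)]
print(estimates)
```

The parameter `n_iter` trades accuracy for time. With a realistic background dataset and a non-additive model, the estimate has sampling variance that shrinks as `n_iter` grows.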

Examples of Shapley Values in Action

Shapley values can be used to explain both classification and regression models. For instance, they can be used to analyze the predictions of a random forest model that predicts cervical cancer, or to explain a model that predicts the number of rented bikes on a given day from weather and calendar information.

Advantages of Shapley Values

One significant advantage of Shapley values is that they fairly distribute the difference between the prediction and the average prediction among the feature values of the instance: the Shapley values add up exactly to that difference. This property sets Shapley values apart from methods such as LIME, which do not guarantee it.
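The efficiency property can be checked on a linear model, where the Shapley values have a simple closed form. The sketch assumes that absent features are filled in from background data and that the feature effects are therefore treated as independent. Under those assumptions, feature j's marginal contribution is the same in every coalition, namely beta_j times the distance between x_j and the background mean of feature j. The model, its coefficients, and the data are hypothetical.

```python
import numpy as np

# Hypothetical linear model f(x) = intercept + beta . x
rng = np.random.default_rng(1)
background = rng.normal(size=(200, 4))
beta = np.array([1.5, -2.0, 0.0, 0.7])
intercept = 3.0
x = np.array([0.2, 1.0, -3.0, 2.5])


def predict(X):
    return intercept + X @ beta


# Closed-form Shapley values for a linear model under background substitution.
phi = beta * (x - background.mean(axis=0))

# Efficiency: the attributions add up to prediction minus average prediction.
gap = predict(x[None, :])[0] - predict(background).mean()
print(phi.sum(), gap)
```

Feature 2 has a zero coefficient and therefore receives a Shapley value of zero.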

Furthermore, Shapley values are backed by solid theory and satisfy several properties, including efficiency, symmetry, dummy, and additivity, which together define a fair payout.
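These four properties can be illustrated on a small cooperative game, which is easier to reason about than a trained model. The sketch below computes exact Shapley values by averaging marginal contributions over every ordering of the players. In this hypothetical toy game, players "a" and "b" are interchangeable (symmetry), "c" never changes the payout (dummy), and the payout is the sum of two sub-games (additivity). All names and payouts are made up for illustration.

```python
from itertools import permutations


def shapley_from_game(players, value):
    """Exact Shapley values: average each player's marginal contribution
    over every ordering in which the players can join the coalition."""
    totals = {player: 0.0 for player in players}
    orderings = list(permutations(players))
    for order in orderings:
        coalition = frozenset()
        for player in order:
            joined = coalition | {player}
            totals[player] += value(joined) - value(coalition)
            coalition = joined
    return {player: total / len(orderings) for player, total in totals.items()}


# Hypothetical toy game: "a" and "b" are interchangeable, "c" never matters.
def value_ab(coalition):
    both = "a" in coalition and "b" in coalition
    either = "a" in coalition or "b" in coalition
    return 10.0 if both else (4.0 if either else 0.0)


def value_d(coalition):
    return 3.0 if "d" in coalition else 0.0


def value_total(coalition):
    return value_ab(coalition) + value_d(coalition)


players = ["a", "b", "c", "d"]
phi = shapley_from_game(players, value_total)
phi_ab = shapley_from_game(players, value_ab)  # sub-game values, used to check additivity
phi_d = shapley_from_game(players, value_d)
print(phi)  # {'a': 5.0, 'b': 5.0, 'c': 0.0, 'd': 3.0}
```

The output shows the four properties. The values sum to the full payout of 13 (efficiency). Players "a" and "b" receive equal shares (symmetry), and "c" receives nothing (dummy). Each player's value in the combined game equals the sum of its values in the two sub-games (additivity).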

Disadvantages of Shapley Values

Despite their advantages, Shapley values have several downsides. One major drawback is the significant computation time required, making the exact computation of Shapley values feasible for only a few features.

Another potential issue is the risk of misinterpreting the Shapley value. The Shapley value is the average contribution of a feature value to the prediction across different coalitions. It is not the difference in prediction we would observe if the feature were removed from the model.

Software and Alternatives

Several software packages are available for calculating Shapley values. For R users, the iml and fastshap packages compute Shapley values. In Julia, you can use the Shapley.jl package.

Additionally, alternatives to Shapley values exist. SHAP, proposed by Lundberg and Lee, is based on Shapley values but can also provide explanations with fewer features. Another approach called breakDown, implemented in R, also shows the contributions of each feature to the prediction but computes them step by step.
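The "step by step" idea can be sketched with a simplified, fixed-order version. The features of the instance are fixed one at a time, and each feature is credited with the change in mean prediction at its step. This sketch is not the breakDown package's algorithm: the ordering heuristic that breakDown uses is not reproduced, and the order is passed in by hand. The model, data, and function name are hypothetical.

```python
import numpy as np


def stepwise_contributions(predict, x, background, order):
    """Fix the features of x one at a time, in the given order; each feature's
    contribution is the change in mean prediction over the background data."""
    data = background.astype(float).copy()
    previous = predict(data).mean()
    contributions = {}
    for j in order:
        data[:, j] = x[j]            # feature j now takes the instance's value
        current = predict(data).mean()
        contributions[j] = current - previous
        previous = current
    return contributions


def model(X):                        # hypothetical model with a pure interaction
    return X[:, 0] * X[:, 1]


background = np.array([[0.0, 0.0], [2.0, 2.0]])
x = np.array([1.0, 1.0])
print(stepwise_contributions(model, x, background, order=[0, 1]))
print(stepwise_contributions(model, x, background, order=[1, 0]))
```

In both orders the contributions add up to the prediction minus the average prediction (1 - 2 = -1). However, the split depends on the order. In the first order, feature 0 receives -1 and feature 1 receives 0; in the second order, the values are reversed. Averaging over both orderings gives each feature -0.5, which is its Shapley value under this value function. Shapley values avoid the order dependence by averaging over all orderings.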

Conclusion

In conclusion, Shapley values provide a valuable method of fairly attributing a machine learning model’s prediction to the feature values of an instance. Although computing them can be complex and time-consuming, they offer unique insight into how machine learning models make predictions. As machine learning continues to evolve, techniques like Shapley values are likely to remain important tools in the data scientist’s arsenal.
